Match Graph - Multiple Supply Demand (DC)¶

The following is a complete example, using the Python API, of matching stores (demands) to depots/trucks (suppliers) via the /match/graph endpoint using an underlying graph created from a modified OpenStreetMap (OSM) dataset. For more information on Network Graphs & Solvers, see Network Graphs & Solvers Concepts.

Prerequisites¶

The prerequisites for running the match graph example are listed below:

- Kinetica (v.

7.0.2or later) - Graph server enabled

- Python API

Match graph scriptD.C. roads CSV file

Python API Installation¶

The native Kinetica Python API is accessible through the following means:

- For development on the Kinetica server:

- For development not on the Kinetica server:

Kinetica RPM¶

In default Kinetica installations, the native Python API is located in the

/opt/gpudb/api/python directory. The

/opt/gpudb/bin/gpudb_python wrapper script is provided, which sets the

execution environment appropriately.

Test the installation:

/opt/gpudb/bin/gpudb_python /opt/gpudb/api/python/examples/example.py

Important

When developing on the Kinetica server, use /opt/gpudb/bin/gpudb_python to run Python programs and /opt/gpudb/bin/gpudb_pip to install dependent libraries.

Git¶

In the desired directory, run the following but be sure to replace

<kinetica-version>with the name of the installed Kinetica version, e.g.,v7.0:git clone -b release/<kinetica-version> --single-branch https://github.com/kineticadb/kinetica-api-python.git

Change directory into the newly downloaded repository:

cd kinetica-api-pythonIn the root directory of the unzipped repository, install the Kinetica API:

sudo python setup.py install

Test the installation (Python 2.7 (or greater) is necessary for running the API example):

python examples/example.py

PyPI¶

The Python package manager, pip, is required to install the API from PyPI.

Install the API:

pip install gpudb --upgrade

Test the installation:

python -c "import gpudb;print('Import Successful')"

If Import Successful is displayed, the API has been installed as is ready for use.

Data File¶

The example script makes reference to a dc_roads.csv data file

in the current directory. This can be updated to point to a valid path on the

host where the file will be located, or the script can be run with the data

file in the current directory.

CSV = "dc_roads.csv"

The dc_shape dataset is a HERE dataset and is analogous to most road network

datasets you could find in that it includes columns for the type of road, the

average speed, the direction of the road, a WKT linestring for its geographic

location, a unique ID integer for the road, and more. The graph used in the

example is created with two columns from the dc_shape dataset:

shape-- a WKT linestring composed of points that make up various streets, roads, highways, alleyways, and footpaths throughout Washington, D.C.direction-- an integer column that conveys information about the directionality of the road, with forward meaning the direction in which the way is drawn in OSM and backward being the opposite direction:0-- a forward one-way road1-- a two-way road2-- a backward one-way road

You'll notice later that the shape column is also part of an inline

calculation for distance as weight during graph creation using

the ST_LENGTH and ST_NPOINTS

geospatial functions.

Script Detail¶

This example is going to demonstrate matching supply trucks to different demand points within Washington, D.C., relying on truck sizes, road weights, and proximity to determine the best path between supply trucks and demands.

Constants¶

Several constants are defined at the beginning of the script:

HOST/PORT-- host and port values for the databaseOPTION_NO_ERROR-- reference to a /clear/table option for ease of use and repeatabilityTABLE_DC-- the name of the table into which the D.C. roads dataset is loadedTABLE_D/TABLE_S-- the names for the tables into which the datasets in this file are loaded.TABLE_Dis a record of each demand's (store) ID, WKT location, size of demand, and their local supplier's ID;TABLE_Sis a record of each supplier's ID, WKT location, truck ID, and truck size.GRAPH_DC-- the D.C. roads graphTABLE_GRAPH_DC_S1/TABLE_GRAPH_DC_S2-- the names for the tables intowhich the solutions are output

HOST = "127.0.0.1"

PORT = "9191"

OPTION_NO_ERROR = {"no_error_if_not_exists": "true"}

TABLE_DC = "dc_roads"

TABLE_D = "demands"

TABLE_S = "supplies"

GRAPH_DC = TABLE_DC + "_graph"

TABLE_GRAPH_DC_S1 = GRAPH_DC + "_solved"

TABLE_GRAPH_DC_S2 = GRAPH_DC + "_solved_w_max_trip"

Table Setup¶

Before the supplies and demands datasets are generated, the D.C. roads dataset is loaded from a local CSV file. First, the type for this table is first defined:

dc_columns = [

["Feature_ID", "long"],

["osm_id", "string", "char16", "nullable"],

["code", "double", "nullable"],

["fclass", "string", "char32", "nullable"],

["name", "string", "char256", "nullable"],

["ref", "string", "char256", "nullable"],

["bidir", "string", "char2", "nullable"],

["maxspeed", "double", "nullable"],

["layer", "double", "nullable"],

["bridge", "string", "char2", "nullable"],

["tunnel", "string", "char2", "nullable"],

["WKT", "string", "wkt"],

["oneway", "int", "int8"],

["weight", "float"]

]

Next, the D.C. roads table is created using the GPUdbTable interface:

table_dc_obj = gpudb.GPUdbTable(

_type=dc_columns,

name=TABLE_DC,

db=kinetica,

options={}

)

Then the records are created from the CSV file and inserted into the table:

dc_data.next() # skip the header

dc_records = []

for record in dc_data:

record_data = [

int(record[0]), # Feature_ID

record[1], # osm_id

float(record[2]), # code

record[3], # fclass

record[4], # name

record[5], # ref

record[6], # bidir

float(record[7]), # maxspeed

float(record[8]), # layer

record[9], # bridge

record[10], # tunnel

record[11], # WKT

int(record[12]), # oneway

float(record[13]) # weight

]

dc_records.append(record_data)

table_dc_obj.insert_records(dc_records)

The demands table type is defined next and the table is created using the GPUdbTable interface:

d_columns = [

["store_id", "int", "int16"],

["store_location", "string", "wkt"],

["demand_size", "int", "int16"],

["supplier_id", "int", "int16"],

["priority", "int", "int16"]

]

table_d_obj = gpudb.GPUdbTable(

_type=d_columns,

name=TABLE_D,

db=kinetica,

options={}

)

Note

There should not be multiple demand records for the same supplier, e.g., two separate demands of 5 and 10 for Store ID 1 should be combined for one demand of 15

Then the demands records are defined and inserted into the table. Six

stores are created in various locations throughout the city with varying

demands but they all use the same supplier. Note that the higher the priority

number, the greater the priority (e.g., 3 is the highest priority in this

example and 0 is the lowest):

d_records = [

[11, "POINT(-77.0297975 38.896235)", 35, 1, 0],

[12, "POINT(-77.0286392 38.9169977)", 15, 1, 0],

[13, "POINT(-77.00128239999999 38.8748973)", 40, 1, 1],

[44, "POINT(-76.97952479999999 38.8995292)", 23, 1, 0],

[45, "POINT(-77.026516 38.9408711)", 28, 1, 2],

[46, "POINT(-77.0254317 38.8848442)", 25, 1, 3]

]

table_d_obj.insert_records(d_records)

Lastly, the supplies table type is defined and the table is created using the GPUdbTable interface:

s_columns = [

["supplier_id", "int", "int16"],

["supplier_location", "string", "wkt"],

["truck_id", "int", "int16"],

["truck_size", "int", "int16"]

]

table_s_obj = gpudb.GPUdbTable(

_type=s_columns,

name=TABLE_S,

db=kinetica,

options={}

)

Five supplies records are defined and inserted into the table: five trucks with varying sizes are assigned to the same supplier.

s_records = [

[1, "POINT(-77.0440586 38.9089819)", 21, 50],

[1, "POINT(-77.0440586 38.9089819)", 22, 50],

[1, "POINT(-77.0440586 38.9089819)", 23, 30],

[1, "POINT(-77.0440586 38.9089819)", 24, 20],

[1, "POINT(-77.0440586 38.9089819)", 25, 16]

]

table_s_obj.insert_records(s_records)

Graph Creation¶

One graph is used for the match graph example utilized in the script:

dc_roads_graph, a graph based on the dc_roads dataset

(one of the CSV files mentioned in Prerequisites).

The dc_roads_graph graph is created with the following

characteristics:

- It is directed because the roads in the graph have directionality (one-way and two-way roads)

- It has no explicitly defined

nodesbecause the example relies on implicit nodes attached to the defined edges - The

edgesare represented using WKT LINESTRINGs in theWKTcolumn of thedc_roadstable (EDGE_WKTLINE). The road segments' directionality is derived from theonewaycolumn of thedc_roadstable (EDGE_DIRECTION). - The

weightsare represented using the cost to travel the segment found in theweightcolumn of thedc_roadstable (WEIGHTS_VALUESPECIFIED). The weights are matched to the edges using the sameWKTcolumn as edges (WEIGHTS_EDGE_WKTLINE) and the sameonewaycolumn as the edge direction (WEIGHTS_EDGE_DIRECTION). - It has no inherent

restrictionsfor any of the nodes or edges in the graph - It will be replaced with this instance of the graph if a graph of the same

name exists (

recreate)

create_dc_graph_response = kinetica.create_graph(

graph_name=GRAPH_DC,

directed_graph=True,

nodes=[],

edges=[

TABLE_DC + ".WKT AS EDGE_WKTLINE",

TABLE_DC + ".oneway AS EDGE_DIRECTION"

],

weights=[

TABLE_DC + ".WKT AS WEIGHTS_EDGE_WKTLINE",

TABLE_DC + ".oneway AS WEIGHTS_EDGE_DIRECTION",

TABLE_DC + ".weight AS WEIGHTS_VALUESPECIFIED"

],

restrictions=[],

options={

"recreate": "true"

}

)

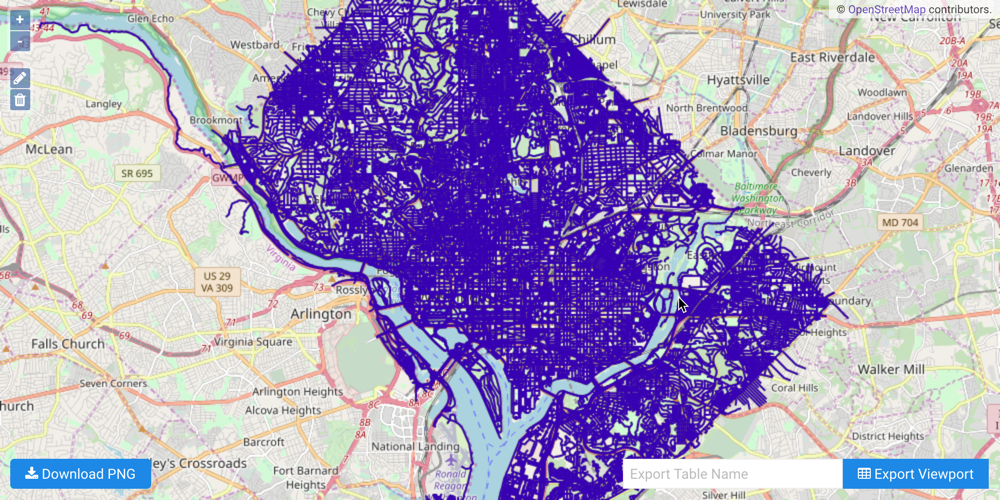

The graph output to WMS:

Multiple Supply Demand with Priority¶

Both the supplies and demands

match identifier combinations are provided to the

/match/graph endpoint so the graph

server can match the supply trucks to the demand locations and with respect to

priority. Using the multiple supply demand match solver results in a solution

table for each unique supplier ID; in this case, one table is created:

dc_roads_graph_solved.

match_graph_dc_resp = kinetica.match_graph(

graph_name=GRAPH_DC,

sample_points=[

TABLE_D + ".store_id AS SAMPLE_DEMAND_ID",

TABLE_D + ".store_location AS SAMPLE_DEMAND_WKTPOINT",

TABLE_D + ".demand_size AS SAMPLE_DEMAND_SIZE",

TABLE_D + ".supplier_id AS SAMPLE_DEMAND_DEPOT_ID",

TABLE_D + ".priority AS SAMPLE_PRIORITY",

"",

TABLE_S + ".supplier_id AS SAMPLE_SUPPLY_DEPOT_ID",

TABLE_S + ".supplier_location AS SAMPLE_SUPPLY_WKTPOINT",

TABLE_S + ".truck_id AS SAMPLE_SUPPLY_TRUCK_ID",

TABLE_S + ".truck_size AS SAMPLE_SUPPLY_TRUCK_SIZE"

],

solve_method="match_supply_demand",

solution_table=TABLE_GRAPH_DC_S1,

options={}

)

The example script utilizes /get/records to extract the results from the solution table:

Truck ID Store IDs Store Drops Cost

=============== =============== ====================== ===============

24 (46) (20.000) 0.348547

25 (46,11) (5.000,11.000) 0.352853

23 (45,12) (28.000,2.000) 0.924494

21 (13,11) (40.000,10.000) 0.710197

22 (12,44,11) (13.000,23.000,14.000) 1.184693

Important

The WKT route was left out of the displayed results for readability.

Solution Analysis¶

For each truck from the supplies table that is involved in the solution, the solution table presents the store(s) visited (by ID), how much "supply" is dropped off at each store, the route taken to the store(s) and back to the supplier, and the cost for the entire route.

Based on the results, you can see that the priority stores' demands were met first: store IDs 46, 45, and 13. Truck ID 24 made a stop at Store 46 and dropped off its entire supply. Once a truck has exhausted its supply in the solution, it is no longer used. Truck ID 25 made a stop at Store ID 46 and 11: it stopped at Store 46 to fulfill the rest of the store's demand and supplied Store 11 with the rest of its available supply. Trucks will drop off as much supply as they are able; if some supply is left on the truck after a drop-off, the truck will continue to the next most optimal drop-off location regardless of priority. Truck ID 23 made a stop at Store ID 45 to fulfill the store's entire demand and Store ID 12 to drop off the rest of its available supply. Truck ID 21 made a stop at Store ID 13 to fulfill the store's entire demand and Store ID 11 to drop off the rest of its available supply. Truck ID 22 made a stop at Store ID 12, 44, and 11 to either fulfill the store's entire demand (44) or to complete the rest of the store's demand (12, 11).

Multiple Supply Demand with Priority and Max Trip Cost¶

Similarly to the example above, both the supplies and demands

match identifier combinations are provided to the

/match/graph endpoint so the graph

server can match the supply trucks to the demand locations and with respect

to priority and the max trip cost. Max trip cost affects this example by

preventing trucks from supplying another store if the cost to travel to

that store is greater than the provided cost. Using the multiple supply demand

match solver results in a solution table for each unique supplier ID; in this

case, one table is created: dc_roads_graph_solved_w_max_trip.

match_graph_dc_resp = kinetica.match_graph(

graph_name=GRAPH_DC,

sample_points=[

TABLE_D + ".store_id AS SAMPLE_DEMAND_ID",

TABLE_D + ".store_location AS SAMPLE_DEMAND_WKTPOINT",

TABLE_D + ".demand_size AS SAMPLE_DEMAND_SIZE",

TABLE_D + ".supplier_id AS SAMPLE_DEMAND_DEPOT_ID",

TABLE_D + ".priority AS SAMPLE_PRIORITY",

"",

TABLE_S + ".supplier_id AS SAMPLE_SUPPLY_DEPOT_ID",

TABLE_S + ".supplier_location AS SAMPLE_SUPPLY_WKTPOINT",

TABLE_S + ".truck_id AS SAMPLE_SUPPLY_TRUCK_ID",

TABLE_S + ".truck_size AS SAMPLE_SUPPLY_TRUCK_SIZE",

],

solve_method="match_supply_demand",

solution_table=TABLE_GRAPH_DC_S2,

options={

"max_trip_cost": "0.6",

"aggregate_output": "false"

}

)

The example script utilizes /get/records again to extract the results from the second solution table:

Truck ID Store IDs Store Drops Cost

=============== =============== =========================== ===============

24 (46) (20.000) 0.348547

21 (46,12,45,11) (5.000,15.000,28.000,2.000) 1.204632

22 (13,11) (40.000,10.000) 0.710197

25 (11) (16.000) 0.211541

23 (11,44) (7.000,23.000) 0.991030

Important

The WKT route was left out of the displayed results for readability.

Download & Run¶

Included below is a complete example containing all the above requests, the data files, and output.

To run the complete sample, ensure the match_graph_dc_multi_supply_demand.py

and dc_roads.csv files are in the same directory (assuming the locations

were not changed in the match_graph_dc_multi_supply_demand.py script);

then switch to that directory and do the following:

If on the Kinetica host:

/opt/gpudb/bin/gpudb_python match_graph_dc_multi_supply_demand.py

If running after using PyPI or GitHub to install the Python API:

python match_graph_dc_multi_supply_demand.py